Dev Mapper C

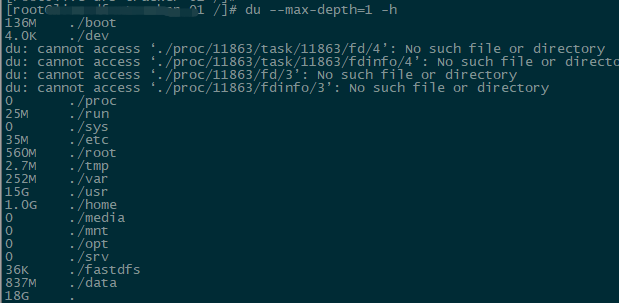

The device mapper is a framework provided by the Linux kernel for mapping physical block devices onto higher-level virtual block devices. It forms the foundation of the logical volume manager (LVM), software RAID and dm-crypt disk encryption, and offers additional features such as file system snapshots. The device mapper works by passing data from a virtual block device, which it provides itself, to another block device. Data can also be modified in transit, for example when the device mapper provides disk encryption.

In one reported case, sudo vgs showed the volume group rhel with 2 physical volumes, 3 logical volumes, 0 snapshots, attributes wz--n-, a size (VSize) of 298.99g and no free space (VFree 0). The root logical volume (rhel-root) was full, and the goal was to increase its size.

The device mapper, like the rest of the Linux block layer, works at the sector level. A sector is defined as 512 bytes, regardless of the actual physical geometry of the block device. Unless otherwise stated, all formulas and values passed to the device mapper are in sectors.
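The device mapper expresses every size as a count of 512-byte sectors, so sizes must be converted before they go into a mapping table. The Python sketch below shows that arithmetic. It also builds one line of a linear target table, whose fields are start sector, length in sectors, target name, backing device and offset on that device. The backing device /dev/sdb is a hypothetical example.

```python
SECTOR_SIZE = 512  # the device mapper always counts in 512-byte sectors


def bytes_to_sectors(size_bytes: int) -> int:
    """Convert a byte count to sectors, rejecting sizes that are not whole sectors."""
    if size_bytes % SECTOR_SIZE:
        raise ValueError(f"{size_bytes} bytes is not a multiple of {SECTOR_SIZE}")
    return size_bytes // SECTOR_SIZE


def linear_table_line(device: str, size_bytes: int, offset_sectors: int = 0) -> str:
    """Build one 'linear' table line: <start> <length> linear <device> <offset>."""
    length = bytes_to_sectors(size_bytes)
    # The mapping starts at sector 0 of the new virtual device.
    return f"0 {length} linear {device} {offset_sectors}"


if __name__ == "__main__":
    # 1 GiB = 1024**3 bytes = 2097152 sectors; /dev/sdb is a hypothetical device.
    print(linear_table_line("/dev/sdb", 1024**3))
```

A line like this is the kind of table that dmsetup create <name> --table '...' accepts. The resulting device then appears under /dev/mapper.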

The create command activates a new device mapper device, which then appears in /dev/mapper. In addition, if the target has metadata, the command reads it, or, if this is the target's first use, it initializes the metadata devices. Note that existing device mapper devices can be passed as parameters (if the target takes a device), so it is possible to 'stack' them.

So far we have three logical volumes, but there are no file systems in them, and without a file system we can't save anything in them. Therefore we create an ext3 file system on share, an xfs file system on backup, and a reiserfs file system on media.
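The file system step above amounts to one mkfs call per logical volume. The Python sketch below only prints those commands. It assumes a hypothetical volume group named fileserver, because the text does not name one, and uses the standard mkfs.ext3, mkfs.xfs and mkfs.reiserfs tools.

```python
import shlex

# Hypothetical volume group name; the text does not give one.
VOLUME_GROUP = "fileserver"

# Logical volume -> file system type, as described in the text.
FILESYSTEMS = {"share": "ext3", "backup": "xfs", "media": "reiserfs"}


def mkfs_commands(vg: str, layout: dict[str, str]) -> list[list[str]]:
    """Return one mkfs argv per logical volume, e.g. ['mkfs.xfs', '/dev/fileserver/backup']."""
    return [[f"mkfs.{fstype}", f"/dev/{vg}/{lv}"] for lv, fstype in layout.items()]


if __name__ == "__main__":
    for argv in mkfs_commands(VOLUME_GROUP, FILESYSTEMS):
        # Print instead of executing: mkfs destroys any data on the volume.
        print(shlex.join(argv))
```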

Device Mapper is a kernel-based framework that underpins many advanced volume management technologies on Linux. Docker's devicemapper storage driver leverages the thin provisioning and snapshotting capabilities of this framework for image and container management.

I am trying to understand the logical disk layout a bit more. cfdisk won't list the current partitions because they are GPT. I suspect that LVM is creating the device mapper entries, but the device mapper commands and the output of parted are confusing: fdisk and cfdisk tell me to use parted because the partitions are GPT, and parted lists them as loop devices.
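For the Docker devicemapper driver mentioned above, direct-lvm mode is configured in /etc/docker/daemon.json. The Python sketch below builds such a file. The option names follow Docker's devicemapper storage driver documentation. The device /dev/xdf and the percentages are example values only. Newer Docker releases may no longer ship this driver, so check your version before relying on it.

```python
import json

# Hypothetical spare block device dedicated to Docker; adjust to your system.
DIRECT_LVM_DEVICE = "/dev/xdf"


def devicemapper_daemon_config(device: str) -> dict:
    """Build a daemon.json body that selects the devicemapper driver in direct-lvm mode."""
    return {
        "storage-driver": "devicemapper",
        "storage-opts": [
            f"dm.directlvm_device={device}",    # block device Docker turns into a thin pool
            "dm.thinp_percent=95",              # % of the device used for data storage
            "dm.thinp_metapercent=1",           # % of the device used for metadata storage
            "dm.thinp_autoextend_threshold=80", # usage % at which LVM auto-extends the pool
            "dm.thinp_autoextend_percent=20",   # how much (%) to grow the pool when extending
            "dm.directlvm_device_force=false",  # do not format a device that has a file system
        ],
    }


if __name__ == "__main__":
    # Write this output to /etc/docker/daemon.json, then restart Docker to apply it.
    print(json.dumps(devicemapper_daemon_config(DIRECT_LVM_DEVICE), indent=2))
```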

This article is a step-by-step process for how to perform Logical Volume Management (LVM) and RAID on encrypted devices. The process applies to the following environments:

- Linux distributions
  - RHEL 7.6+
  - Ubuntu 18.04+
  - SUSE 12+

- Azure Disk Encryption single-pass extension

- Azure Disk Encryption dual-pass extension

Scenarios

The procedures in this article support the following scenarios:

- Configure LVM on top of encrypted devices (LVM-on-crypt)

- Configure RAID on top of encrypted devices (RAID-on-crypt)

After the underlying device or devices are encrypted, you can create the LVM or RAID structures on top of that encrypted layer.

The physical volumes (PVs) are created on top of the encrypted layer and are then used to create the volume group. You create the logical volumes and add the required entries to /etc/fstab.

In a similar way, the RAID device is created on top of the encrypted layer on the disks. A file system is created on top of the RAID device and added to /etc/fstab as a regular device.

Considerations

We recommend that you use LVM-on-crypt. RAID is an option when LVM can't be used because of specific application or environment limitations.

You'll use the EncryptFormatAll option. For more information about this option, see Use the EncryptFormatAll feature for data disks on Linux VMs.


Although you can use this method when you're also encrypting the OS, we're just encrypting data drives here.

The procedures assume that you already reviewed the prerequisites in Azure Disk Encryption scenarios on Linux VMs and in Quickstart: Create and encrypt a Linux VM with the Azure CLI.

The Azure Disk Encryption dual-pass version is on a deprecation path and should no longer be used on new encryptions.

General steps

When you're using the 'on-crypt' configurations, use the process outlined in the following procedures.

Note

We're using variables throughout the article. Replace the values accordingly.

Deploy a VM

The following commands are optional, but we recommend that you apply them on a newly deployed virtual machine (VM).

PowerShell:

Azure CLI:

Attach disks to the VM

Repeat the following commands for each of the $N new disks that you want to attach to the VM.

PowerShell:

Azure CLI:
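With the Azure CLI, each new disk is one az vm disk attach call with --new. The Python sketch below generates those calls for $N disks. The resource group, VM name, disk size and the <vm>-datadisk<n> naming scheme are hypothetical placeholders for the variables the article uses.

```python
import shlex

# Hypothetical values standing in for the article's variables.
RESOURCE_GROUP = "my-rg"
VM_NAME = "my-vm"
DISK_SIZE_GB = 5


def attach_disk_commands(rg: str, vm: str, count: int, size_gb: int) -> list[list[str]]:
    """Build one 'az vm disk attach --new' command per new data disk."""
    commands = []
    for n in range(1, count + 1):
        commands.append([
            "az", "vm", "disk", "attach",
            "--resource-group", rg,
            "--vm-name", vm,
            "--name", f"{vm}-datadisk{n}",  # hypothetical naming scheme
            "--new",                         # create an empty managed disk
            "--size-gb", str(size_gb),
        ])
    return commands


if __name__ == "__main__":
    for argv in attach_disk_commands(RESOURCE_GROUP, VM_NAME, count=4, size_gb=DISK_SIZE_GB):
        print(shlex.join(argv))
```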

Verify that the disks are attached to the VM

PowerShell:

Azure CLI:

Portal:

OS:

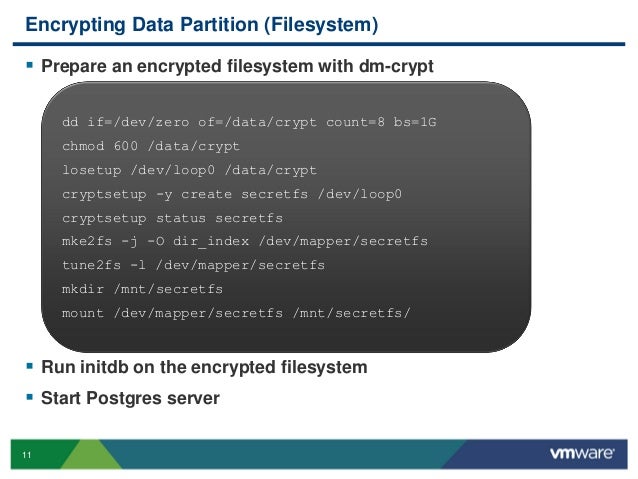

Configure the disks to be encrypted

This configuration is done at the operating system level. The corresponding disks are configured for a traditional encryption through Azure Disk Encryption:

- File systems are created on top of the disks.

- Temporary mount points are created to mount the file systems.

- File systems are configured on /etc/fstab to be mounted at boot time.

Check the device letters assigned to the new disks. In this example, we're using four data disks.

Create a file system on top of each disk

This command creates an ext4 file system on each disk listed in the 'in' part of the 'for' loop.
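The loop can be mirrored in Python to preview exactly which devices will be formatted. The sketch below assumes the four data disks are /dev/sdc through /dev/sdf, which is a hypothetical assignment. Confirm the real letters with lsblk first, because mkfs.ext4 erases the disk.

```python
import shlex

# Hypothetical device letters of the four new data disks; confirm them with lsblk.
DISK_LETTERS = ["c", "d", "e", "f"]


def mkfs_ext4_commands(letters: list[str]) -> list[list[str]]:
    """Equivalent of: for disk in c d e f; do mkfs.ext4 -F /dev/sd${disk}; done"""
    # -F makes mkfs.ext4 proceed even though the target is a whole disk, not a partition.
    return [["mkfs.ext4", "-F", f"/dev/sd{letter}"] for letter in letters]


if __name__ == "__main__":
    for argv in mkfs_ext4_commands(DISK_LETTERS):
        print(shlex.join(argv))  # preview only; nothing is executed
```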

Find the universally unique identifier (UUID) of the file systems that you just created, create a temporary folder, add the corresponding entries to /etc/fstab, and mount all the file systems.

This command also loops over each disk listed in the 'in' part of the 'for' loop:
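The following Python sketch reads each UUID with blkid -s UUID -o value and builds the matching temporary fstab line. The /tempdata<letter> mount points and the defaults,nofail options are assumptions for illustration. read_uuid needs root and is defined here but not called.

```python
import subprocess


def read_uuid(device: str) -> str:
    """File system UUID of `device`, as printed by `blkid -s UUID -o value` (run as root)."""
    result = subprocess.run(
        ["blkid", "-s", "UUID", "-o", "value", device],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def temp_fstab_entry(uuid: str, letter: str) -> str:
    """Temporary fstab line; /tempdata<letter> is a hypothetical mount point."""
    return f"UUID={uuid} /tempdata{letter} ext4 defaults,nofail 0 0"


# Usage (as root): for each disk letter, create /tempdata<letter>, append
# temp_fstab_entry(read_uuid(f"/dev/sd{letter}"), letter) to /etc/fstab,
# then run `mount -a`.
```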

Verify that the disks are mounted properly

Also verify that the disks are configured:

Encrypt the data disks

PowerShell using a key encryption key (KEK):

Azure CLI using a KEK:
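The Python sketch below assembles the Azure CLI call for data-disk encryption with a KEK. In this sketch, --encrypt-format-all is the CLI switch for the EncryptFormatAll option and --volume-type DATA limits encryption to data disks. The resource group, VM, vault and KEK values are placeholders. Check az vm encryption enable --help for your CLI version before running it.

```python
import shlex


def encrypt_data_disks_command(rg: str, vm: str, keyvault: str, kek: str) -> list[str]:
    """Build an `az vm encryption enable` call that encrypts data disks only."""
    return [
        "az", "vm", "encryption", "enable",
        "--resource-group", rg,
        "--name", vm,
        "--disk-encryption-keyvault", keyvault,
        "--key-encryption-key", kek,    # the key encryption key (KEK)
        "--volume-type", "DATA",        # data disks only; the OS disk is left alone
        "--encrypt-format-all",         # the EncryptFormatAll option
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(shlex.join(encrypt_data_disks_command("my-rg", "my-vm", "my-keyvault", "<kek-url>")))
```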

Verify the encryption status

Continue to the next step only when all the disks are encrypted.

PowerShell:

Azure CLI:

Portal:

OS level:
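At the OS level, the check amounts to confirming that every data disk now has a crypt device under it. The Python sketch below reads the JSON form of lsblk (lsblk -J -o NAME,TYPE). It reports disks that are missing or have no child of TYPE crypt. The sketch assumes, as in this article, that each data disk is encrypted as a whole, so the crypt device is a direct child of the disk. The disk names sdc through sdf are hypothetical.

```python
import json
import subprocess


def lsblk_json() -> str:
    """Raw JSON from `lsblk -J -o NAME,TYPE` (defined here, not called)."""
    return subprocess.run(
        ["lsblk", "-J", "-o", "NAME,TYPE"], capture_output=True, text=True, check=True
    ).stdout


def unencrypted_disks(lsblk_output: str, disks: list[str]) -> list[str]:
    """Disks from `disks` that are absent or have no direct child of TYPE 'crypt'."""
    has_crypt = {}
    for dev in json.loads(lsblk_output)["blockdevices"]:
        children = dev.get("children", [])
        has_crypt[dev["name"]] = any(child.get("type") == "crypt" for child in children)
    return [disk for disk in disks if not has_crypt.get(disk, False)]


# Usage: unencrypted_disks(lsblk_json(), ["sdc", "sdd", "sde", "sdf"]) should be [].
```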

The extension adds the file systems to /var/lib/azure_disk_encryption_config/azure_crypt_mount (older encryptions) or to /etc/crypttab (newer encryptions).

This file will take care of activating these disks during the boot process so that LVM or RAID can use them later.
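To see which /dev/mapper names will exist after boot, and therefore which paths to use later for LVM or RAID, you can read /etc/crypttab. The Python sketch below assumes the standard crypttab layout, where the first field is the mapped name and the second is the encrypted source device. It does not handle the older azure_crypt_mount file.

```python
def crypttab_mappings(text: str) -> list[tuple[str, str]]:
    """Return (mapper name, source device) pairs from /etc/crypttab content."""
    mappings = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        if len(fields) < 2:
            continue  # not a usable entry
        # Field 1 becomes /dev/mapper/<name>; field 2 is the encrypted source device.
        mappings.append((fields[0], fields[1]))
    return mappings


# Usage: with open("/etc/crypttab") as f: print(crypttab_mappings(f.read()))
```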

Don't worry about the mount points in this file. Azure Disk Encryption will lose the ability to mount the disks as normal file systems after we create a physical volume or a RAID device on top of those encrypted devices. (Doing so removes the file system that we created during the preparation process.)

Remove the temporary folders and temporary fstab entries

Unmount the file systems on the disks that will be used as part of LVM.

And remove the /etc/fstab entries:
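Removing the temporary entries is a filter over /etc/fstab. The Python sketch below drops only lines whose mount-point column starts with a given prefix. It assumes the hypothetical /tempdata prefix and leaves comments and all other entries untouched. This is stricter than deleting every line that merely contains the word.

```python
def drop_temp_entries(fstab_text: str, mount_prefix: str = "/tempdata") -> str:
    """Return fstab content without entries whose mount point starts with `mount_prefix`."""
    kept = []
    for line in fstab_text.splitlines(keepends=True):
        fields = line.split()
        is_entry = len(fields) >= 2 and not fields[0].startswith("#")
        if is_entry and fields[1].startswith(mount_prefix):
            continue  # temporary mount added during preparation
        kept.append(line)
    return "".join(kept)
```

Unmount the temporary file systems first, and keep a copy of /etc/fstab before writing the filtered result back.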

Verify that the disks are not mounted and that the entries on /etc/fstab were removed

And verify that the disks are configured:

Steps for LVM-on-crypt

Now that the underlying disks are encrypted, you can create the LVM structures.

Instead of using the device name, use the /dev/mapper paths for each of the disks to create a physical volume (on the crypt layer on top of the disk, not on the disk itself).

Configure LVM on top of the encrypted layers

Create the physical volumes

You'll get a warning that asks whether it's OK to wipe out the file system signature. Continue by entering y, or answer the prompt non-interactively by piping in echo 'y'.

Note

The /dev/mapper/device names here need to be replaced with your actual values, based on the output of lsblk.
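The Python sketch below enforces the key rule of this step: physical volumes go on the /dev/mapper crypt devices, never on the raw /dev/sdX disks. It builds one pvcreate call per device. It also shows how to feed "y" on standard input, like echo 'y' | pvcreate. The device names are placeholders, and run_with_yes is defined but not called.

```python
import subprocess


def pvcreate_commands(devices: list[str]) -> list[list[str]]:
    """One pvcreate per crypt device; refuses raw /dev/sdX paths."""
    commands = []
    for dev in devices:
        if not dev.startswith("/dev/mapper/"):
            raise ValueError(f"{dev}: use the /dev/mapper crypt device, not the raw disk")
        commands.append(["pvcreate", dev])
    return commands


def run_with_yes(commands: list[list[str]]) -> None:
    """Run each command as root, answering 'y' to the wipe-signature prompt."""
    for argv in commands:
        subprocess.run(argv, input="y\n", text=True, check=True)
```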

Verify the information for physical volumes

Create the volume group

Create the volume group by using the same devices already initialized:

Check the information for the volume group


Create logical volumes
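Logical volumes are carved out of the volume group with lvcreate. Use -L for a fixed size, or -l 100%FREE to take whatever space is left. The Python sketch below builds those calls. The names vgdata, lvdata1 and lvdata2 and the 10G size are hypothetical.

```python
from typing import Optional


def lvcreate_commands(vg: str, volumes: list[tuple[str, Optional[str]]]) -> list[list[str]]:
    """Build lvcreate calls; a size of None means 'all remaining space' (last volume only)."""
    commands = []
    for index, (name, size) in enumerate(volumes):
        if size is None:
            if index != len(volumes) - 1:
                raise ValueError("only the last volume can take the remaining free space")
            commands.append(["lvcreate", "-l", "100%FREE", "-n", name, vg])
        else:
            commands.append(["lvcreate", "-L", size, "-n", name, vg])
    return commands
```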

Check the created logical volumes

Create file systems on top of the structures for logical volumes

Create the mount points for the new file systems

Add the new file systems to /etc/fstab and mount them
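LVM exposes each logical volume under /dev/mapper as VG-LV, doubling any hyphen inside either name. This is why the root volume of a volume group named centos shows up as /dev/mapper/centos-root. The /dev/<vg>/<lv> symlink also works. The Python sketch below derives that path and the fstab line with nofail. The volume and mount-point names are hypothetical.

```python
def mapper_path(vg: str, lv: str) -> str:
    """/dev/mapper name of a logical volume: VG-LV, with hyphens inside names doubled."""
    return f"/dev/mapper/{vg.replace('-', '--')}-{lv.replace('-', '--')}"


def lvm_fstab_entry(vg: str, lv: str, mount_point: str, fstype: str = "ext4") -> str:
    """fstab line for an LVM volume on an encrypted disk; nofail keeps boot from hanging."""
    return f"{mapper_path(vg, lv)} {mount_point} {fstype} defaults,nofail 0 0"
```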

Verify that the new file systems are mounted


This variation of lsblk lists the devices with their dependencies in reverse order. It helps to identify the devices grouped by logical volume instead of by the original /dev/sd[disk] device names.

It's important to make sure that the nofail option is added to the mount point options of the LVM volumes created on top of a device encrypted through Azure Disk Encryption. It prevents the OS from getting stuck during the boot process (or in maintenance mode).

If you don't use the nofail option:

- The OS will never reach the stage where Azure Disk Encryption is started and the data disks are unlocked and mounted.

- The encrypted disks are unlocked only at the end of the boot process, so the LVM volumes and file systems can't be mounted automatically until Azure Disk Encryption unlocks them.
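Before rebooting, you can check that no data volume lacks nofail. /dev/mapper also holds OS volumes such as centos-root, which should not get nofail. For that reason, the Python sketch below only checks devices that match prefixes you pass in. The vgdata prefix used in the test is hypothetical.

```python
def entries_missing_nofail(fstab_text: str, device_prefixes: tuple[str, ...]) -> list[str]:
    """Devices matching `device_prefixes` whose fstab options do not include nofail."""
    missing = []
    for line in fstab_text.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[0].startswith("#"):
            continue  # comment or incomplete entry
        device, options = fields[0], fields[3].split(",")
        if device.startswith(device_prefixes) and "nofail" not in options:
            missing.append(device)
    return missing


# Usage: with open("/etc/fstab") as f: print(entries_missing_nofail(f.read(), ("/dev/mapper/vgdata-",)))
```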

You can reboot the VM to test this and confirm that the file systems are mounted automatically after boot. This process might take several minutes, depending on the number and sizes of file systems.

Reboot the VM and verify after reboot

Steps for RAID-on-crypt

Now that the underlying disks are encrypted, you can continue to create the RAID structures. The process is the same as the one for LVM, but instead of using the device name, use the /dev/mapper paths for each disk.

Configure RAID on top of the encrypted layer of the disks

Note

The /dev/mapper/device names here need to be replaced with your actual values, based on the output of lsblk.
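The RAID array is built from the crypt devices with mdadm --create. The Python sketch below assembles that call and, as with LVM, rejects raw /dev/sdX members. The array name /dev/md10, RAID level 0 and the member names are placeholders. Choose the level your workload needs.

```python
def mdadm_create_command(md_device: str, level: int, members: list[str]) -> list[str]:
    """Build an mdadm --create call over /dev/mapper crypt devices."""
    for dev in members:
        if not dev.startswith("/dev/mapper/"):
            raise ValueError(f"{dev}: build the array on the crypt devices, not the raw disks")
    return [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(members)}",  # must match the number of members listed
        *members,
    ]
```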

Check/monitor RAID creation
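RAID creation is usually monitored through /proc/mdstat. The Python sketch below parses the array lines (name, state, level, members) and any "resync =" or "recovery =" percentage. It is a simplified parser written against the common mdstat layout, not a complete one. For example, inactive arrays that don't show a level are skipped.

```python
import re

# e.g. "md10 : active raid0 dm-3[3] dm-2[2] dm-1[1] dm-0[0]"
ARRAY_LINE = re.compile(r"^(md\d+) : (\S+)(?: \([^)]*\))? (raid\d+|linear) (.+)$")
# e.g. "[=>....]  resync =  7.5% (75/1000) finish=1.0min speed=1000K/sec"
PROGRESS = re.compile(r"(resync|recovery)\s*=\s*([\d.]+)%")


def parse_mdstat(text: str) -> dict:
    """Map array name -> state, level, member names and resync/recovery progress (or None)."""
    arrays = {}
    current = None
    for line in text.splitlines():
        match = ARRAY_LINE.match(line)
        if match:
            name, state, level, members = match.groups()
            current = name
            arrays[name] = {
                "state": state,
                "level": level,
                # strip the role index and flags, e.g. "dm-3[3]" -> "dm-3"
                "members": [re.sub(r"\[\d+\].*$", "", tok) for tok in members.split()],
                "progress": None,
            }
            continue
        progress = PROGRESS.search(line)
        if progress and current:
            arrays[current]["progress"] = float(progress.group(2))
    return arrays


# Usage: with open("/proc/mdstat") as f: print(parse_mdstat(f.read()))
```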

Create a file system on top of the new RAID device

Create a new mount point for the file system, add the new file system to /etc/fstab, and mount it:


Verify that the new file system is mounted:

It's important to make sure that the nofail option is added to the mount point options of the RAID volumes created on top of a device encrypted through Azure Disk Encryption. It prevents the OS from getting stuck during the boot process (or in maintenance mode).

If you don't use the nofail option:

- The OS will never reach the stage where Azure Disk Encryption is started and the data disks are unlocked and mounted.

- The encrypted disks are unlocked only at the end of the boot process, so the RAID volumes and file systems can't be mounted automatically until Azure Disk Encryption unlocks them.

You can reboot the VM to test this and confirm that the file systems are mounted automatically after boot. This process might take several minutes, depending on the number and sizes of file systems.

And when you can log in: